How DBT was used to convert from Google BigQuery Scheduled Queries

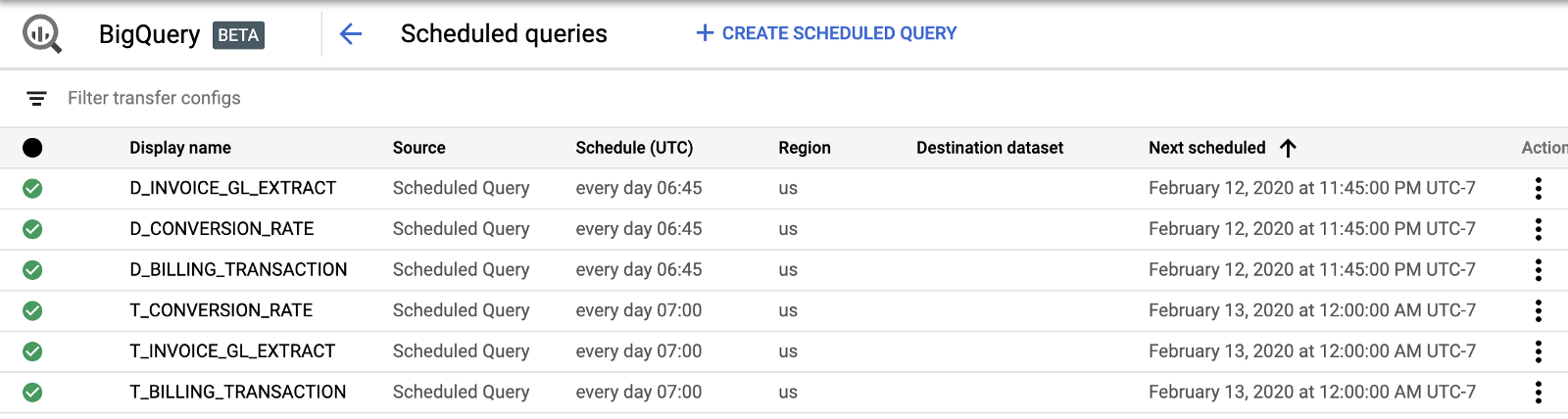

Google BigQuery has neat functionality that allows users to schedule queries in standard SQL, DDL or DML statements. Additionally, you can set them to run on a recurring basis.

This can be great for light transformations; however, if more comprehensive transformation and automated regression testing is needed dbt (data build tool) is an affordable solution.

dbt is a tool that allows engineers to transform their data using select statements, it then creates tables and views for you. It comes with built-in functionality for version control and automated testing for quality assurance. Learn more about dbt here.

Let’s take a deep dive into how dbt works by looking at the following use case. The requirement was to load data into BigQuery from various sources into their own target DataSets. Then lightly transform the data, with consolidation and cleaning, from the target DataSet into a Stage DataSet. Then load the data into a final Star Schema design into an EDW DataSet. Plus, we needed regression testing at each point of transformation into each DataSet to ensure quality.

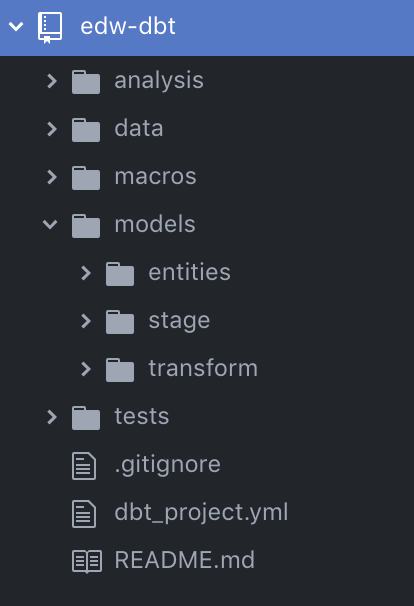

So how does dbt work? dbt is built using a directory of .sql and .yml files. First a project.yml file has to be created. This is where you can define the project details, connection, and file path configurations. Details about creating the project.yml file can be found here.

The next thing dbt needs is Model .sql files where you define simple SQL statements. Depending on what was configured in the project.yml file, dbt will drop and create tables or views or even load data incrementally. There is no need to create individual DDL’s, it does most of the heavy lifting so you can focus on developing.

For this project we organized our model .sqlfiles into stage, transform, and entities folders. The stage folder is where we lightly transformed the data into the Stage DataSet. The data in the Stage DataSet had multiple rows for one ID, as all states of the ID were loaded. To account for this we used .sql files in the transform folder to grab the most recent row.

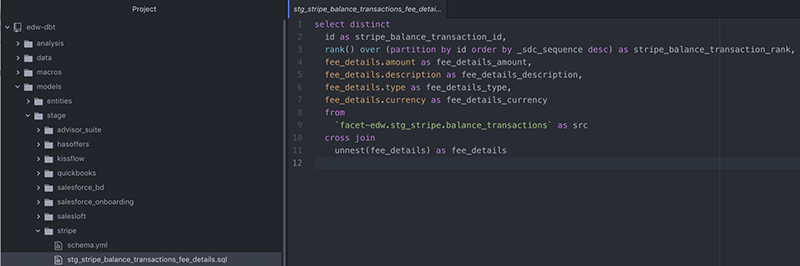

Let’s take a closer look at some of the.sql files within the Stage Folder. This is where we pulled records from the target DataSet and transformed them into the Stage DataSet. Within the stage folder, we are able to further organize the.sql files with subfolders. Expanding the stage and stripe folders, we can see the stg_stripe_balance_transactions.sql file. All we have to do to develop is write a simple SQL statement, dbt does the rest based on the project .ymlfile configuration. It is important to note the name of the file as the name of the file becomes the name of the table in BigQuery DataSet, make sure to name carefully.

Notice we added a rank column in the stg_stripe_balance_transactions.sql file, this is then utilized in the Transform .sqlfiles to grab the most recent record.

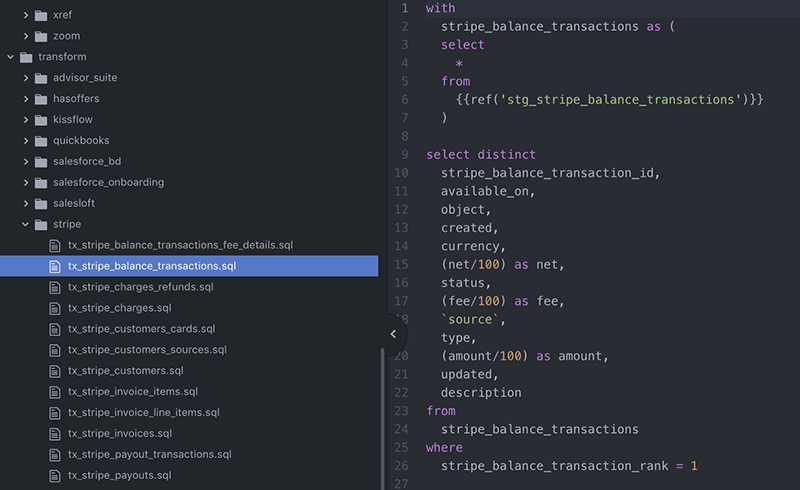

Let’s take a look at the tx_stripe_balance_transactions.sql file from within the transform folder. We are capturing the most recent record by adding to the WHERE stripe_balance_transaction = 1 . Additionally, we are utilizing an alias for stg_stripe_balance_transactions {{ref('stg_stripe_balance_transactions'}} in the tx_stripe_balance_transactions.sql file. dbt will create a relation once the model file is run. By default, dbt uses the filename of the model. More about aliases can be found here.

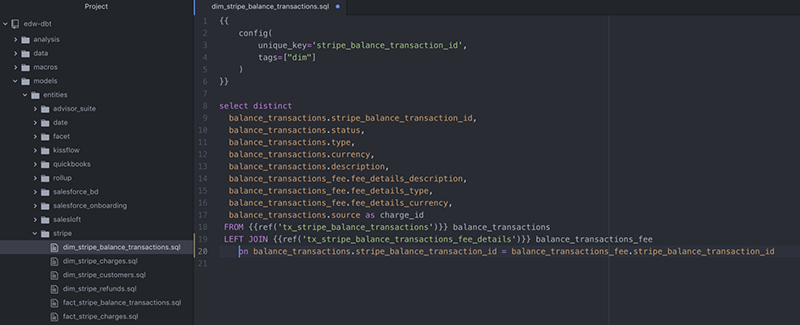

Our last transformation requirement for this project was to transform the data into a Star Schema into an EDW DataSet in BigQuery. This was done within the Entities Folder. Here we defined the Dimension and Fact Tables using a select statement.

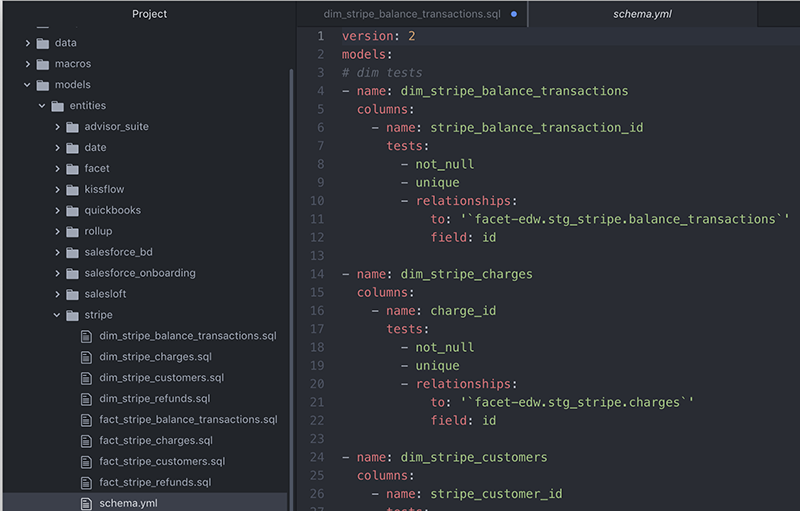

The final and most important requirement for this project was to develop regression testing. dbt has built-in testing functionality which makes it extremely easy to ensure your data is accurate. For each folder you can define specific tests for those .sql files contained within it using a schema.yml file. You can develop schema tests to ensure referential integrity, uniqueness, and not null appropriateness. Within the Schema, you can utilize the alias’ and the dbt syntax which can found here.

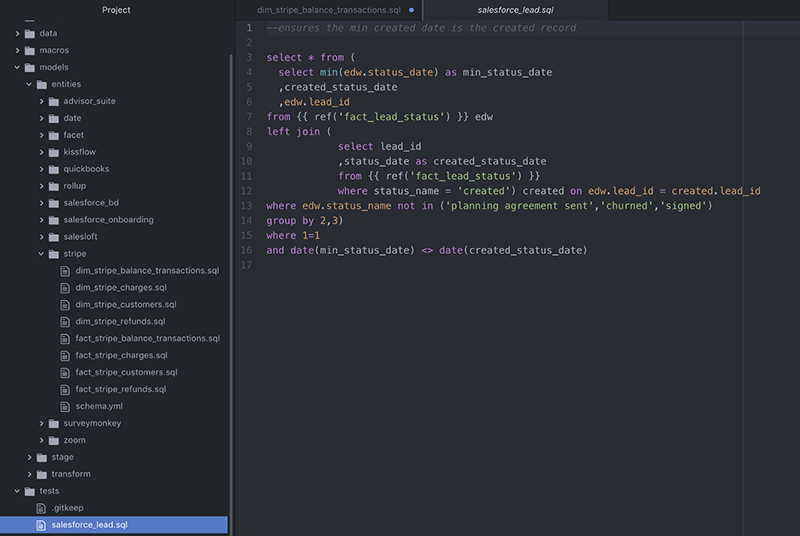

Of course, there is always going to be a unique test required. dbt will not let you down. Using the Tests Folder, you can define select statements that return 0 rows on success, or more than 0 rows on failure. It is necessary to reference the tests folder in dbt project.yml file.

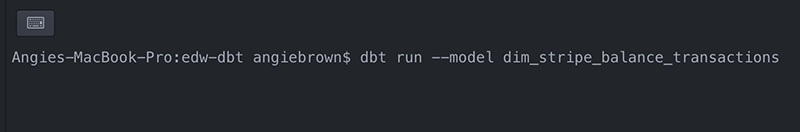

As you develop you will want to run and test your models. We executed this dbt project via Command Line Interface (CLI). Here are some helpful the commands you can use:

- dbt test: executes tests defined in a project. This will execute all test files. To run an individual test file use:

dbt test — — model name - dbt run: runs the models in a project. This will execute all model files. To run an individual test file use:

dbt run— — model name - dbt help: will list the commands available

More commands can be found here.

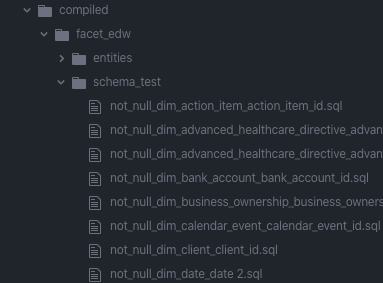

When you run or test, the actual SQL that is run can be found within the dbt target/compiled folder within the project. This is helpful for troubleshooting purposes.

Another built-in feature dbt provides is a documentation website for your project. Once confident with the build you can utilize the website to review the graph lineage of your project. dbt uses the latest version of your project via GitHub, GitLab, BitBucket, etc. The dbt website actually has a documentation website available, so you can go and play with a project. It can be found here.

You will be able to see all of the models that have been built, drill into them, and start exploring. By highlighting a specific model file you will have access to see the Details, Description, Columns, and the source code being used to generate the table.

As you can see, the interface gives you access to all of the information about your project. This allows users to better understand the relationships between models in one place. Additionally you can share links to specific graphs which allows you to collaborate with colleagues. More about how to utilize the documentation website while you are developing can be found here.

While all of this could have been done in BigQuery Scheduled Queries, it would not have been as easy to maintain and update on the fly, nor would it have been version controlled. We were able to create a data warehouse, with the help of dbt, in a clean, easy, efficient and cost-effective manner.

9 Responses to How DBT was used to convert from Google BigQuery Scheduled Queries